Verification & Validation of Machine Learned & AI Models

Machine-learned (ML) and AI models degrade over time in production. As adoption of these models steadily increases, the problem has become evident enough that some scientists, such as Dr. Allen of Rice University, are raising awareness of a reproducibility crisis, especially in biomedical research, that is wasting significant time and money.

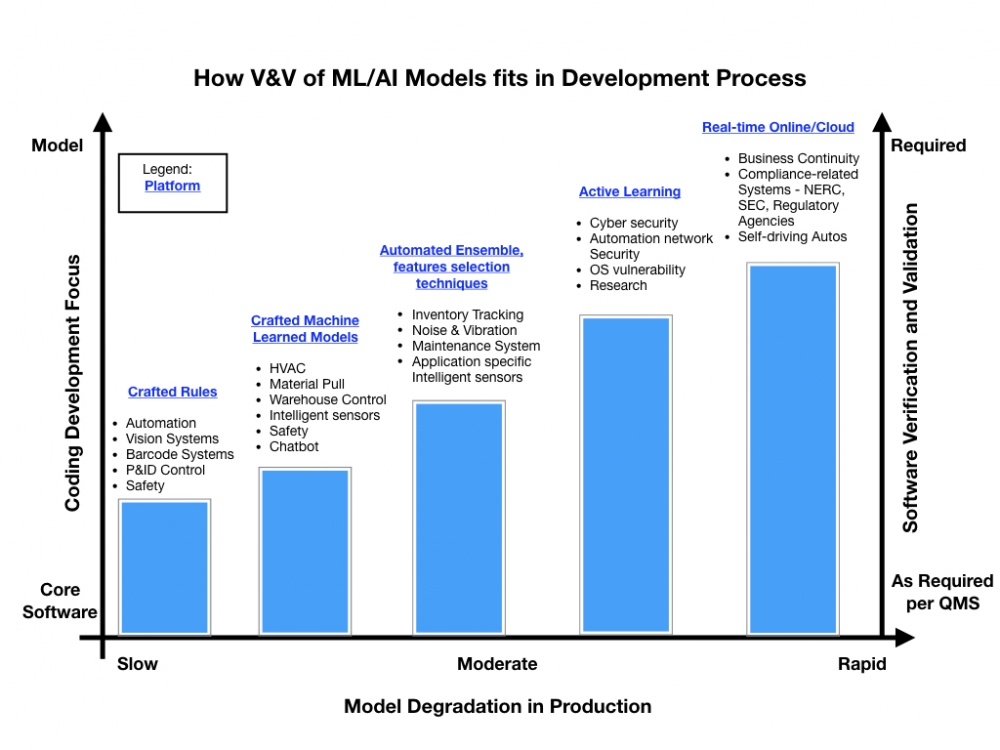

The accompanying chart was put together to help explain how vulnerable models are to degradation in various types of applications. To reduce the risks that degradation poses, and thus make an ML/AI model certifiable, there are two focus areas to consider: 1) the platform running the model; and 2) the requirement for verification and validation (V&V) of ML and AI models. The first is a technical aspect with cost implications. The second deals more with responsible development, as mandated by ethical engineering practices, quality requirements and key values such as those set out in "The Montreal Declaration for a Responsible Development of AI".

Each of these two focus areas is elaborated on in turn.

Platforms: How quickly a model degrades depends on the application and the problem the model is designed to solve. Putting a platform in place to support the model is key to ensuring it can be tuned to suit the purpose it is meant to serve. Compare, for example, the platforms for a vision system and a cyber security system. The model for a vision system is built from a set of crafted rules, tested to confirm it serves its purpose, and is for the most part static from that point on. In contrast, a model protecting against cyber attacks needs frequent adjustment and tuning, so its platform must support active supervised and unsupervised learning to stay relevant. A static cyber security model (one based on a limited set of rules) would degrade very quickly and require constant dataset updating and redeployment, wasting time and money.
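To make the idea of a platform that watches for degradation more concrete, here is a minimal sketch in Python. It is not taken from any particular platform: the class name `DegradationMonitor`, the baseline accuracy, the tolerance and the window size are all hypothetical choices for illustration. The sketch compares rolling accuracy in production against the accuracy measured at release and flags when retraining may be warranted.

```python
from collections import deque


class DegradationMonitor:
    """Tracks rolling accuracy of a deployed model against a release baseline.

    Hypothetical illustration: names and thresholds are assumptions,
    not part of any specific platform.
    """

    def __init__(self, baseline_accuracy, tolerance=0.05, window=100):
        self.baseline_accuracy = baseline_accuracy  # accuracy measured at release
        self.tolerance = tolerance                  # acceptable drop before flagging
        self.outcomes = deque(maxlen=window)        # 1 = correct, 0 = wrong

    def record(self, prediction, actual):
        """Store whether one production prediction matched the observed outcome."""
        self.outcomes.append(1 if prediction == actual else 0)

    def rolling_accuracy(self):
        """Return accuracy over the current window, or None if nothing is recorded."""
        if not self.outcomes:
            return None
        return sum(self.outcomes) / len(self.outcomes)

    def needs_retraining(self):
        """Flag degradation only once a full window of outcomes is available."""
        if len(self.outcomes) < self.outcomes.maxlen:
            return False
        return (self.baseline_accuracy - self.rolling_accuracy()) > self.tolerance


# Usage: a model released at 95% accuracy, checked over a 10-prediction window.
monitor = DegradationMonitor(baseline_accuracy=0.95, tolerance=0.05, window=10)
for predicted, observed in [("attack", "attack")] * 5 + [("benign", "attack")] * 5:
    monitor.record(predicted, observed)
print(monitor.rolling_accuracy(), monitor.needs_retraining())
```

A static model would need this kind of check wired to a manual redeployment process, whereas a platform with learning capabilities could use the same signal to trigger tuning.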

Verification & Validation (V&V): Meeting the requirements of a quality management system (QMS) and incorporating good engineering control practices is the second key. Surprisingly absent from public ML/AI development lifecycle frameworks, V&V is a task users need to consider seriously when model degradation is a concern. Performing V&V, especially on models that will run on more sophisticated platforms, is not only favourable from an effort/cost perspective but can also provide safety benefits, notably in autonomous vehicle applications. On the right of the chart (the vertical axis), we find that as the code for the ML/AI algorithm becomes more important to the ML/AI system and surrounding infrastructure, so does the requirement for performing V&V.
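As a rough illustration of how V&V might be expressed as a release gate, consider the Python sketch below. It assumes a simple classifier exposed as a `predict` function. The function names, the checks chosen and the accuracy threshold are all hypothetical, and a real QMS would define its own requirements. Verification here asks whether the model behaves as specified (outputs within the allowed set, repeatable results), while validation asks whether it meets the user's need on held-out data.

```python
def verify_model(predict, samples, allowed_labels):
    """Verification: check the model against its specification.

    Hypothetical checks: every output is an allowed label, and the same
    input always produces the same output.
    """
    for x in samples:
        first = predict(x)
        if first not in allowed_labels:
            return False
        if predict(x) != first:
            return False
    return True


def validate_model(predict, holdout, min_accuracy):
    """Validation: check the model meets the user's need on held-out data."""
    correct = sum(1 for x, y in holdout if predict(x) == y)
    return correct / len(holdout) >= min_accuracy


def vv_report(predict, samples, allowed_labels, holdout, min_accuracy):
    """Combine both checks into a single release decision."""
    verified = verify_model(predict, samples, allowed_labels)
    validated = validate_model(predict, holdout, min_accuracy)
    return {"verified": verified, "validated": validated,
            "release": verified and validated}


# Usage with a toy threshold classifier (an assumption for illustration).
def toy_predict(x):
    return "high" if x >= 0.5 else "low"


holdout = [(0.1, "low"), (0.9, "high"), (0.6, "high"), (0.4, "high")]
report = vv_report(toy_predict, [0.0, 0.5, 1.0], {"low", "high"},
                   holdout, min_accuracy=0.7)
print(report)
```

Re-running a gate like this whenever the model is tuned or redeployed is one way to keep V&V evidence current as the model changes.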

With all implementations of ML/AI, it is important to thoroughly define the requirements early in the project to ensure a high-quality model is delivered to the user. A good Data Science Lifecycle shows model evaluation, including cross-validation, model reporting and A/B testing, but a great one will also include provisions for the platform requirements and thoughtful V&V testing, leading to a higher-quality, certifiable ML/AI model.

Thanks.

Peter Darveau, P. Eng.
